Audio Data Services

Speech and audio data power the next generation of AI applications. From voice assistants and transcription engines to multimodal LLMs and generative speech models, building robust systems requires data that reflects the complexity of real-world communication.

With 25+ years of expertise and a crowd of over 1M vetted contributors across 500+ languages and dialects, Appen provides the end-to-end AI training data solutions that global model builders and enterprises rely on to train, test, and deploy at scale.

What is Audio Data for AI?

Audio data fuels the training and evaluation of AI models that listen and speak. It spans speech-to-text (STT), automatic speech recognition (ASR), text-to-speech (TTS), and non-speech event detection. High-quality multilingual AI datasets help models:

- Understand disfluent, spontaneous, and code-switched speech

- Recognize diverse accents and dialects

- Generate expressive, context-aware spoken responses

- Handle real-world noise and non-verbal audio events

Types of Speech Models

Appen delivers tailored audio datasets that enable models to perform reliably across diverse users, languages, and environments.

- Speech-to-Text (STT) Models: Transcribe and annotate speech for dictation, virtual assistants, video captioning, and meeting transcriptions.

- Text-to-Speech (TTS) Models: Convert text into natural, human-like speech for applications like virtual assistants, audiobook and podcast narration, and assistive tools for the visually impaired.

- Audio Classification Models: Categorize speech clips into defined groups (e.g., wake words for virtual assistants or keyword spotting for call center operations).

Why is Audio Data Important?

High-quality audio data is essential for accurate AI performance in voice-driven and multimodal systems.

- Enhance Model Accuracy: Diverse and annotated audio improves ASR and TTS models, reducing word error rates and improving speech synthesis.

- Increase Efficiency: Well-structured datasets accelerate training, saving time and compute resources.

- Expand Global Reach: Multilingual speech data ensures your AI can engage users across geographies and cultural contexts.

- Tackle Edge Cases: Specialized data for low-resource languages, code-switching, and noisy conditions ensures robustness in real-world use.
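Word error rate (WER), mentioned above as a measure of ASR accuracy, is conventionally defined as the number of word substitutions, deletions, and insertions needed to turn the model output into the reference transcript, divided by the number of reference words. The following is a minimal sketch of that calculation, not a description of Appen's tooling. The function name `word_error_rate` is hypothetical, and splitting on whitespace with no case folding or punctuation handling is a simplifying assumption; production scoring usually normalizes text first.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Illustrative strings only, not real evaluation data.
wer = word_error_rate("turn on the lights", "turn off the light")
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference.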

Types of Audio Data Services

Appen supports the entire speech-AI development lifecycle with modular services you can tailor to your needs.

Data Collection

Onsite and remote AI data collection of scripted and spontaneous speech across diverse languages, demographics, domains, and environments.

Transcription

Linguistically accurate transcription with rich metadata (e.g., timestamps, speaker identity, emotions, descriptive tokens) to support multilingual STT and ASR training.
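To make the metadata listed above concrete, here is one way a single transcribed segment could be represented and exported. This is an illustrative sketch only: the class `TranscriptSegment`, its field names, the label values, and the JSON Lines export are all assumptions, not Appen's delivery format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class TranscriptSegment:
    """One transcribed stretch of audio. All field names are hypothetical."""
    start_s: float                 # segment start, seconds from start of file
    end_s: float                   # segment end, seconds from start of file
    speaker_id: str                # anonymized speaker label
    text: str                      # verbatim transcription
    emotion: Optional[str] = None  # optional emotion label
    tokens: List[str] = field(default_factory=list)  # descriptive tokens

    def __post_init__(self) -> None:
        # Reject segments with negative or non-increasing timestamps.
        if self.start_s < 0 or self.end_s <= self.start_s:
            raise ValueError("segment needs 0 <= start_s < end_s")

    @property
    def duration_s(self) -> float:
        return self.end_s - self.start_s


def to_jsonl(segments: List[TranscriptSegment]) -> str:
    """Serialize segments as JSON Lines, one segment per line."""
    return "\n".join(json.dumps(asdict(s), ensure_ascii=False) for s in segments)


# Made-up example segments, including a code-switched utterance.
segments = [
    TranscriptSegment(0.0, 2.5, "spk_01", "hola, can I get a coffee", emotion="neutral"),
    TranscriptSegment(2.5, 4.0, "spk_02", "sure", tokens=["[background_noise]"]),
]
jsonl = to_jsonl(segments)
```

Keeping timestamps, speaker labels, and event tokens in separate fields rather than inline in the text lets the same record feed both ASR training, which needs clean text, and diarization or event detection, which need the metadata.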

Translation and Localization

Translate and localize audio data to reflect cultural and dialectal variation worldwide, supporting inclusive multilingual LLM translation and user experiences.

Data Annotation

Custom audio data annotation services for your use case, so you can train your model on high-fidelity datasets that cover diverse native speakers, devices, and environments.

Model Evaluation

Robust LLM evaluation and benchmarking of TTS and ASR outputs to refine multilingual voice quality, realism, and overall accuracy.

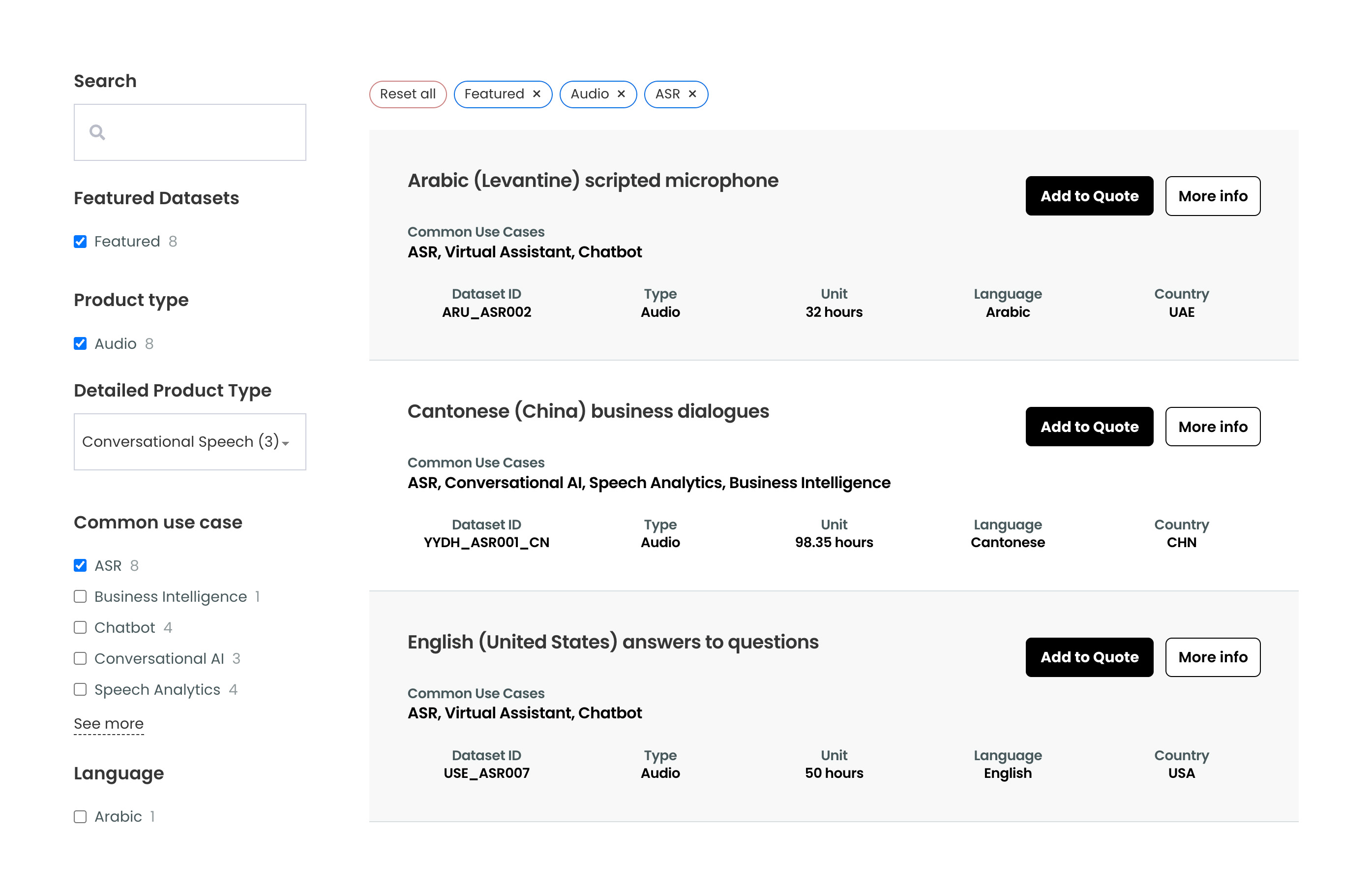

Off-the-Shelf Multilingual Audio Datasets

Accelerate your project with Appen’s 320+ pre-built audio datasets covering 80+ languages. Access 13,000+ hours of annotated speech – including scripted prompts, spontaneous conversation, pronunciation dictionaries, and noisy recordings. These datasets are ready for training, benchmarking, or zero-shot evaluation.

Audio Data in Action

Expanding Multilingual ASR for a Global Platform

Appen transcribed 165,000+ hours of audio across 150 locales, achieving 99.5% accuracy and enabling global voice recognition coverage.

Improving Drive-Thru Speech Recognition

Appen annotated bilingual, noisy drive-thru audio (English/Spanish), boosting quality scores to 98%+ in English and 95%+ in Spanish.

Enhancing Name Recognition in Voice Systems

Appen collected 1.5M+ spoken name utterances across 14 markets, improving proper noun accuracy for a major tech company’s ASR/TTS models.

Creating Emotionally Expressive TTS in Chinese

By recording and annotating 30+ hours of emotional speech across 13 emotions, Appen enabled a Chinese voice assistant to generate natural, emotionally adaptive speech.

Telephony Data Collection for Low-Resource Languages

Appen delivered ~550 hours of high-quality telephony data and transcriptions for 10 low-resource, low-standardization languages to support speech model training.

Start Your Audio Data Project

With deep expertise in speech and audio data services, Appen is uniquely positioned to help you train accurate, inclusive, and production-ready AI models. Whether you need custom datasets, multilingual coverage, or ready-to-use corpora, our team will design the right solution for your AI goals.