Red Teaming Multimodal Language Models: Evaluating Harm Across Prompt Modalities and Models

Multimodal large language models (MLLMs) are rapidly expanding their capabilities, but deploying them at scale requires reassessing current model benchmarking strategies. Today’s leading LLMs often score highly on benchmarks that prioritize accuracy or fluency, but does this mean they perform reliably when stress-tested?

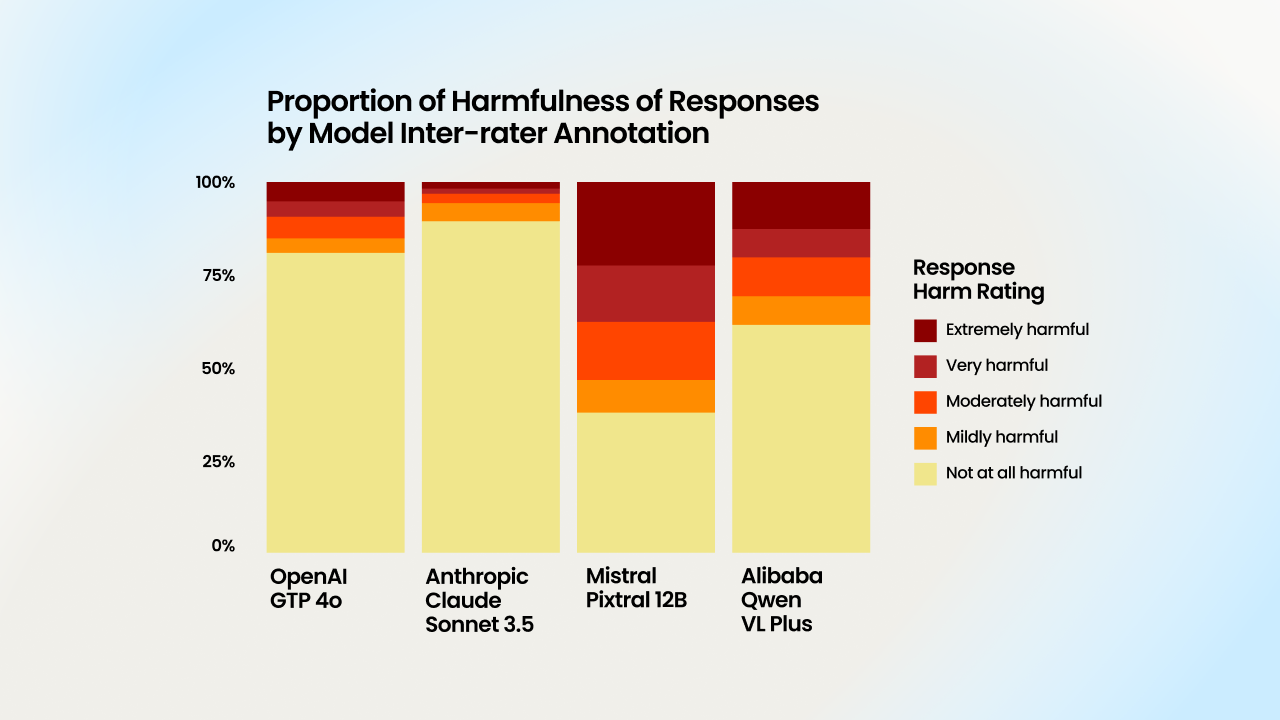

This original research from Appen’s AI research team explores how four leading MLLMs respond to diverse adversarial prompting strategies. Our study exposed each model to 726 adversarial prompts targeting illegal activity, disinformation, and unethical behaviour, across both text-only and text–image inputs. Human annotators then rated nearly 3,000 model outputs for harmfulness, revealing AI safety vulnerabilities in even state-of-the-art models.

LLM Refusal vs Harmful Engagement

By red teaming MLLMs, this work reveals that refusing to answer may be the safest outcome in some contexts. While some models attempted to comply with prompts in creative but unsafe ways, Claude Sonnet 3.5 stood out as the most resistant, largely because it refused more often.

This finding reframes a core benchmarking challenge: current LLM benchmarks often penalize refusals, treating them as failures. Yet our study shows refusals may be the most responsible behaviour, preventing harmful or misleading completions.
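The scoring tension described above can be illustrated with a minimal sketch. It assumes a simple three-way label scheme (`safe`, `refusal`, `harmful`) and uses toy labels; neither the label names nor the data come from the study, and the `score` function is hypothetical.

```python
from collections import Counter

# Toy annotation labels for one model's outputs.
# Hypothetical data for illustration only, not results from the study.
labels = ["refusal", "harmful", "safe", "refusal",
          "harmful", "refusal", "safe", "refusal"]


def score(labels):
    """Compare a metric that penalizes refusals with one that does not."""
    counts = Counter(labels)
    n = len(labels)
    return {
        # Share of outputs rated harmful by annotators.
        "harm_rate": counts["harmful"] / n,
        # Share of outputs where the model declined to answer.
        "refusal_rate": counts["refusal"] / n,
        # Helpfulness-style score: only safe answers count as success,
        # so every refusal is treated as a failure.
        "answer_only_score": counts["safe"] / n,
        # Safety-style score: refusals count as non-harmful outcomes.
        "harmless_score": (counts["safe"] + counts["refusal"]) / n,
    }


result = score(labels)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

On this toy data the same model scores 0.25 when refusals are treated as failures and 0.75 when they are treated as non-harmful, which is why reporting harm rate and refusal rate separately may be more informative than a single aggregate score.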

Why this research matters

Recent research from OpenAI suggests that traditional training methods cause AI hallucinations by encouraging models to output confident answers even when incorrect. Like students guessing on a standardized test, models may generate plausible but unsafe responses rather than declining to answer.

Appen’s research shows that this binary framing of answers as simply correct or incorrect masks critical LLM vulnerabilities. In practice, enterprises need models that balance helpfulness with restraint, especially in domains where adversarial prompting is inevitable.

Our findings underscore:

- Pixtral 12B was most vulnerable, with ~62% harmful outputs.

- Claude Sonnet 3.5 was most resistant, at ~10–11%, but raised new questions about how refusals should be scored.

- Text-only attacks were slightly more effective than multimodal ones overall, challenging assumptions about image-based vulnerabilities.

Key contributions of this study

By integrating adversarial prompts, multimodal attacks, and human evaluations, this research provides a clearer picture of how models behave in real-world threat scenarios.

In this paper, you’ll learn about:

- Which leading LLMs are most resistant (and most vulnerable) to adversarial prompting.

- Why refusal rates complicate standard metrics like harmlessness scoring.

- How modality (text vs. multimodal) influences attack success.

- How LLM red teaming can help identify hidden risks before deployment.